The CPU is often called the brain of a computer: it handles the instructions and processes involved in running your system.

While this remains true today, it no longer gives a full picture of how processing works in a modern computer.

Over the past decade, another component has improved rapidly and become just as important, if not more so: the GPU.

"I'm not a gamer, so I don't need a GPU." This is a common misconception among less technically inclined people. One might also hear that "GPUs are a waste of money and only used for gaming." This is far from the complete story and a classic case of oversimplification. A GPU, or graphics processing unit, produces the video output and is found in every modern device capable of displaying one.

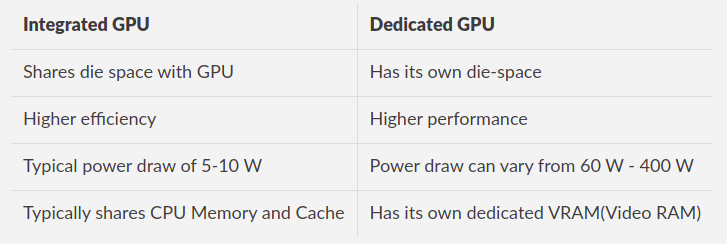

Integrated GPUs (iGPUs) share die space with the CPU and are built into most desktop processors and nearly all mobile processors. Yes, your thin and light laptop with an Intel CPU, contrary to common myths, has a GPU too.

Intel HD Graphics and Iris Graphics are among the common names for Intel's iGPUs. Even the Adreno in your fancy Android phone is an iGPU built into its Snapdragon processor.

Dedicated GPUs (dGPUs) sit on a die that is physically separate from the CPU and communicate with the CPU via PCIe lanes.

Because a dedicated GPU has its own die, manufacturers have more freedom in choosing the die size, number of cores and other factors. As a result, we see larger variations in power draw as well as performance.

PCIe, or Peripheral Component Interconnect Express, is the high-speed interface that connects the devices attached or plugged into the motherboard. At startup, the system detects the attached devices, identifies the links between them to build a kind of traffic map, and negotiates the width of each link.

In simple terms, one could describe PCIe links as highways connecting your computer's parts to the CPU, and the lanes as highway lanes. More lanes mean higher bandwidth, just as more traffic can flow on a wider highway.

Since dedicated GPUs are bandwidth-heavy compared to other components such as USB devices or storage drives, they are usually allocated 8 or 16 lanes.

Here is a small table containing the maximum bandwidth of various PCIe generations.

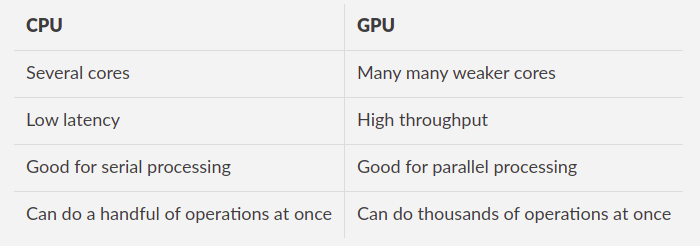
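If you want to see where numbers like these come from, here is a minimal Python sketch. It assumes the commonly quoted per-lane transfer rates and line encodings for PCIe 1.0 through 5.0 and ignores protocol overhead, so its results are theoretical maximums and may be rounded differently from the table above. The function name `pcie_bandwidth_gb_per_s` is made up for this illustration.

```python
# Per-lane raw transfer rate (GT/s) and line-encoding efficiency per generation.
# Assumed values: the commonly quoted figures for PCIe 1.0 to 5.0.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b encoding: 8 data bits per 10 bits sent
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),  # 128b/130b encoding
    5: (32.0, 128 / 130),  # 128b/130b encoding
}


def pcie_bandwidth_gb_per_s(generation: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s, ignoring protocol overhead."""
    transfer_rate_gt, efficiency = PCIE_GENERATIONS[generation]
    # One transfer moves one bit per lane; divide by 8 to convert bits to bytes.
    per_lane_gb = transfer_rate_gt * efficiency / 8
    # Bandwidth scales linearly with the number of lanes.
    return per_lane_gb * lanes


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        row = ", ".join(
            f"x{lanes}: {pcie_bandwidth_gb_per_s(gen, lanes):6.2f} GB/s"
            for lanes in (1, 8, 16)
        )
        print(f"PCIe {gen}.0  {row}")
```

With these assumptions, a PCIe 3.0 x16 slot works out to roughly 15.75 GB/s in each direction, and every doubling of the lane count doubles the bandwidth, which is the highway analogy in numbers.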

Now, let's have a look at the fundamental differences in the processing approach of a GPU compared to a CPU.

A CPU consists of relatively few powerful cores with a lot of cache memory. It is good at handling sequential tasks but not as good at running many tasks simultaneously. A GPU, on the other hand, consists of many more, simpler cores. Each one is individually weaker and supports fewer instructions than a CPU core, but together they can complete a task suited to parallel processing much faster than a CPU.

This makes them ideal for graphics, where textures, lighting and the rendering of shapes have to be done at once to keep images flying across the screen.
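To make the idea a little more concrete, here is a rough Python sketch of why graphics work splits up so well: brightening one pixel depends only on that pixel, so the image can be cut into chunks and handed to separate workers. This is only an analogy. The function names are invented for this example, Python threads stand in for GPU cores, and they will not show a real speedup. The point is that the result is identical no matter how the work is divided.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int, amount: int = 40) -> int:
    """Brighten one 8-bit grayscale pixel; the result depends only on that pixel."""
    return min(255, pixel + amount)


def brighten_sequential(pixels: list[int]) -> list[int]:
    """CPU-style: a single worker walks through the pixels in order."""
    return [brighten(p) for p in pixels]


def brighten_parallel(pixels: list[int], workers: int = 4) -> list[int]:
    """GPU-style in spirit: split the image into chunks, one chunk per worker."""
    chunk_size = max(1, -(-len(pixels) // workers))  # ceiling division
    chunks = [pixels[i:i + chunk_size] for i in range(0, len(pixels), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the input chunks.
        results = pool.map(brighten_sequential, chunks)
    return [p for chunk in results for p in chunk]


if __name__ == "__main__":
    image = [0, 100, 200, 250] * 4  # a tiny made-up 16-pixel "image"
    assert brighten_parallel(image) == brighten_sequential(image)
    print(brighten_parallel(image))
```

A real GPU applies the same principle with thousands of hardware threads instead of four software ones.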

I suggest that you take a look at this short video for an interesting depiction of the idea presented above.

AI has also made rapid strides thanks to the parallel processing nature of GPUs.

They have become key to a technology called “deep learning.” Deep learning pours vast quantities of data through neural networks, training them to perform tasks too complicated for any human coder to describe.

VRAM, or Video Random Access Memory, is the GPU's own dedicated memory. It stores the data the GPU works with, such as textures and the images and video frames it generates. It is sometimes loosely referred to as a frame buffer, although strictly speaking the frame buffer is the part of VRAM that holds the rendered image.

If the system does not have a sufficient amount of VRAM, the textures and images you are trying to load can cause the GPU to spill over into the system's RAM. This hurts performance because system RAM is slower for the GPU to reach than its own VRAM.

More VRAM is generally better, but a GPU with more VRAM is not necessarily faster than a different GPU with less.
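To get a feel for how quickly VRAM fills up, here is a back-of-the-envelope sketch in Python. It assumes uncompressed images at 4 bytes per pixel (8 bits each for red, green, blue and alpha) and a hypothetical card with 4 GiB of VRAM. Real games compress textures and the driver reserves memory of its own, so treat the numbers as rough orders of magnitude rather than predictions.

```python
MIB = 1024 * 1024


def image_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one uncompressed image (a frame or a texture) in bytes."""
    return width * height * bytes_per_pixel


def fits_in_vram(required_bytes: int, vram_bytes: int) -> bool:
    """True if the data fits without spilling over into system RAM."""
    return required_bytes <= vram_bytes


if __name__ == "__main__":
    full_hd_frame = image_bytes(1920, 1080)
    uhd_frame = image_bytes(3840, 2160)
    texture = image_bytes(4096, 4096)

    print(f"1920x1080 frame:   {full_hd_frame / MIB:.2f} MiB")
    print(f"3840x2160 frame:   {uhd_frame / MIB:.2f} MiB")
    print(f"4096x4096 texture: {texture / MIB:.2f} MiB")

    vram = 4096 * MIB  # hypothetical 4 GiB card
    scene = 100 * texture  # 100 large uncompressed textures
    print(f"Scene needs {scene / MIB:.0f} MiB, fits in VRAM: {fits_in_vram(scene, vram)}")
```

A single frame is small, about 8 MiB at 1920x1080, but a scene with a hundred large uncompressed textures would need 6400 MiB, which no longer fits on the hypothetical 4 GiB card.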

We can certainly see that GPUs are here to stay.

However, since GPUs outperform CPUs only in specific, well-optimized scenarios, one can conclude that the two complement each other's weaknesses rather than one outright replacing the other.